Fusing Reasoning Models, Generative AI & LLMs: The Winning Formula

Why This Trio Excels:

- Language Fluency (LLMs): Delivers natural, context-aware text generation and understanding.

- Logical Rigor (Reasoning Models): Ensures sound, step-by-step problem solving and adherence to formal constraints.

- Autonomous Control (Agentic Frameworks): Coordinates tasks, invokes submodels, and manages decision flows with little or no human intervention.

The Models

By orchestrating three complementary AI paradigms (reasoning engines, generative networks, and large language models), we can build systems that are both creatively fluent and rigorously reliable.

Example Architecture:

LLM (e.g., GPT):

Handles natural language tasks such as understanding queries, generating responses, and summarizing data.

Reasoning Engine:

Takes over tasks that require logic, such as math, step-by-step planning, and fact verification.

Agentic Framework (e.g., AutoGPT, LangChain, or a custom loop built on OpenAI function calling):

Coordinates everything. It can:

- Break a goal into subtasks

- Decide when to ask the LLM for help

- Trigger the reasoning module when logic is required

- Store results in memory or a database

- Adapt behaviour over time

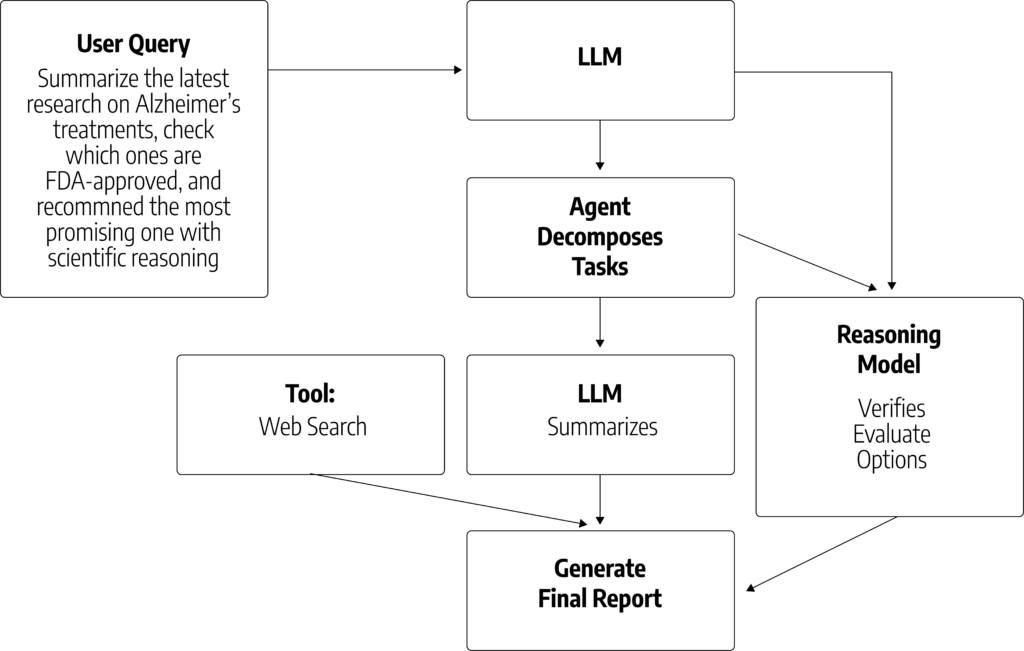
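To make this division of labour concrete, here is a minimal Python sketch of the coordination loop described above. It assumes subtasks arrive already labelled as "language" or "logic"; `Task`, `Orchestrator`, `llm_stub` and `reasoning_stub` are hypothetical names, and the two stubs stand in for a real LLM call and a real reasoning engine. Frameworks such as LangChain provide their own abstractions for this, which the sketch does not attempt to reproduce.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

@dataclass
class Task:
    kind: str      # hypothetical routing label: "language" or "logic"
    payload: str

@dataclass
class Orchestrator:
    """Toy agent loop: routes each subtask to a handler and records the result."""
    handlers: Dict[str, Callable[[str], str]]
    memory: List[str] = field(default_factory=list)

    def run(self, tasks: List[Task]) -> List[str]:
        for task in tasks:
            handler = self.handlers.get(task.kind)
            if handler is None:
                raise ValueError(f"no handler for task kind {task.kind!r}")
            self.memory.append(handler(task.payload))  # store results in memory
        return self.memory

def llm_stub(text: str) -> str:
    """Placeholder for an LLM call (language tasks)."""
    return f"summary of: {text}"

def reasoning_stub(expr: str) -> str:
    """Placeholder for a reasoning engine: exactly sums integers written as 'a+b+c'."""
    return str(sum(int(x) for x in expr.split("+")))

agent = Orchestrator({"language": llm_stub, "logic": reasoning_stub})
print(agent.run([Task("language", "user query"), Task("logic", "2+3+4")]))
# ['summary of: user query', '9']
```

Routing on an explicit label, rather than letting the LLM answer everything, is what lets the logic path return an exact result instead of a fluent guess.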

Scenario: AI Agent Solving a Research Task

Let’s walk through an example workflow showing how an agentic AI might operate: deciding when to use a large language model (LLM) for text understanding and generation, a reasoning model for logic-heavy tasks, and external tools or APIs for specialized functions such as search or math.

Example request: “Summarize the latest research on Alzheimer’s treatments, check which ones are FDA-approved, and recommend the most promising one with scientific reasoning.”

User Query

The LLM parses the request and recognizes the subtasks:

- Get the latest Alzheimer’s research

- Identify FDA-approved treatments

- Analyze the options and recommend the best one

LLM used for task decomposition.
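One common way to implement the decomposition step is to ask the LLM for a machine-readable plan and validate it before the agent acts on it. The sketch below assumes the model is instructed to reply with JSON; the prompt wording, `decompose` and `stub_llm` are illustrative, and `stub_llm` returns a canned reply instead of calling a real model.

```python
import json
from typing import Callable, List

# Hypothetical prompt wording; tune it for whichever model you actually use.
DECOMPOSE_PROMPT = (
    "Split the user's request into an ordered list of subtasks. "
    'Reply with JSON only, shaped like {{"subtasks": ["..."]}}.\n\n'
    "Request: {request}"
)

def decompose(request: str, llm: Callable[[str], str]) -> List[str]:
    """Ask the LLM for subtasks, then validate its JSON before the agent acts on it."""
    data = json.loads(llm(DECOMPOSE_PROMPT.format(request=request)))
    subtasks = data.get("subtasks") if isinstance(data, dict) else None
    if not isinstance(subtasks, list) or not all(isinstance(s, str) for s in subtasks):
        raise ValueError("LLM reply did not contain a list of subtask strings")
    return subtasks

def stub_llm(prompt: str) -> str:
    """Hypothetical stand-in for a real model call; returns a canned JSON reply."""
    return json.dumps({"subtasks": [
        "Get latest Alzheimer's research",
        "Identify FDA-approved treatments",
        "Analyze & recommend best one",
    ]})

request = ("Summarize the latest research on Alzheimer's treatments, check which ones "
           "are FDA-approved, and recommend the most promising one.")
for step in decompose(request, stub_llm):
    print("-", step)
```

Validating the structure up front means a malformed plan fails loudly at this step instead of surfacing as a confusing error further down the pipeline.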

The agent uses a web search tool/API to gather recent publications or clinical trial data.

External tool used for data retrieval.
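The retrieval step can be sketched as a named tool that the agent looks up and calls. Everything here is hypothetical: `web_search` returns placeholder records rather than calling a real search or clinical-trials API, and the recency cut-off is an assumed parameter.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

@dataclass
class Document:
    title: str
    year: int
    source: str  # e.g. "publication" or "clinical_trial"

# Hypothetical tool registry: the agent looks tools up by name instead of hard-coding them.
TOOLS: Dict[str, Callable[[str], List[Document]]] = {}

def register(name: str):
    def wrap(fn):
        TOOLS[name] = fn
        return fn
    return wrap

@register("web_search")
def web_search(query: str) -> List[Document]:
    # Placeholder results; a real tool would call a search or clinical-trials API.
    return [
        Document("Placeholder paper A", 2024, "publication"),
        Document("Placeholder trial B", 2019, "clinical_trial"),
        Document("Placeholder paper C", 2023, "publication"),
    ]

def retrieve_recent(query: str, since: int) -> List[Document]:
    """Call the search tool and keep items from `since` onwards, newest first."""
    docs = TOOLS["web_search"](query)
    return sorted((d for d in docs if d.year >= since), key=lambda d: d.year, reverse=True)

recent = retrieve_recent("Alzheimer's treatment", since=2022)
print([d.title for d in recent])
# ['Placeholder paper A', 'Placeholder paper C']
```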

The LLM is prompted to summarize the top research papers.

LLM used for summarization & synthesis.
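Before the LLM can summarize, the retrieved papers have to fit into its prompt. This is a rough sketch, assuming papers arrive as (title, abstract) pairs; `build_summary_prompt` is a hypothetical helper, the character budget is a crude stand-in for a model's token limit, and the [n] tags are there so later steps can cite sources.

```python
from typing import List, Tuple

HEADER = ("Summarize the key findings of the papers below in 3-5 sentences. "
          "Cite papers by their [n] tag.\n\n")

def build_summary_prompt(papers: List[Tuple[str, str]], max_chars: int = 4000) -> str:
    """Build a summarization prompt from (title, abstract) pairs.

    Each paper is tagged [1], [2], ... so the LLM can cite it, and each block is
    truncated so the prompt aims to stay within max_chars.
    """
    if not papers:
        raise ValueError("no papers to summarize")
    separators = 2 * (len(papers) - 1)  # "\n\n" between blocks
    per_paper = max(0, (max_chars - len(HEADER) - separators) // len(papers))
    blocks = [f"[{i}] {title}\n{abstract}"[:per_paper]
              for i, (title, abstract) in enumerate(papers, start=1)]
    return HEADER + "\n\n".join(blocks)

prompt = build_summary_prompt(
    [("Placeholder paper A", "Abstract text ..."), ("Placeholder paper C", "Abstract text ...")]
)
print(prompt)
```

Splitting the budget evenly is the simplest policy; ranking papers first and giving the strongest ones more room would be a natural refinement.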

The agent queries an FDA database API or another structured data source. A reasoning model or logic component then compares the drug names against their approval status.

Reasoning Model + Tool used for fact-checking.
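The approval check can be kept deterministic: a lookup against structured data rather than a question put to the LLM. In this sketch, `check_approval` and the `approvals` table are hypothetical, and the drug names are placeholders, not real approval records.

```python
from typing import Dict, List

def normalize(name: str) -> str:
    """Case- and whitespace-insensitive key so 'Drug-Alpha ' matches 'drug-alpha'."""
    return " ".join(name.lower().split())

def check_approval(candidates: List[str], approval_table: Dict[str, str]) -> Dict[str, str]:
    """Label each candidate using a structured approval table.

    Names missing from the table are reported as 'unknown' rather than guessed,
    which is the point of routing this step away from free-form LLM text.
    """
    table = {normalize(k): v for k, v in approval_table.items()}
    return {name: table.get(normalize(name), "unknown") for name in candidates}

# Placeholder records; a real agent would load these from an FDA data source.
approvals = {"Drug-Alpha": "approved", "drug-beta": "not approved"}
print(check_approval(["drug-alpha", "DRUG-BETA", "drug-gamma"], approvals))
# {'drug-alpha': 'approved', 'DRUG-BETA': 'not approved', 'drug-gamma': 'unknown'}
```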

The LLM proposes a candidate treatment.

The reasoning model then evaluates it logically against efficacy, trial phase, side effects, and approval status.

The agent ensures the recommendation is based on evidence, not just text fluency.

LLM + Reasoning Model collaborate on decision-making.
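A minimal sketch of this propose-then-verify pattern follows. The `Evidence` fields mirror the criteria listed above, but the thresholds, the scoring weights, the `review` helper and the candidate data are all illustrative assumptions. If the LLM's pick breaks a hard constraint (or has no evidence record), the rule layer falls back to the best-scoring candidate that passes.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

@dataclass
class Evidence:
    name: str
    efficacy: float                   # assumed to be normalized to 0..1
    trial_phase: int                  # 1-4
    serious_side_effect_rate: float   # 0..1
    approved: bool

def violations(e: Evidence) -> List[str]:
    """Hard constraints a recommendation must satisfy (thresholds are illustrative)."""
    problems = []
    if not e.approved:
        problems.append("not approved")
    if e.trial_phase < 3:
        problems.append("no phase 3+ evidence")
    if e.serious_side_effect_rate > 0.2:
        problems.append("side-effect rate above threshold")
    return problems

def score(e: Evidence) -> float:
    # Illustrative weighting: reward efficacy, penalize serious side effects.
    return e.efficacy - 0.5 * e.serious_side_effect_rate

def review(llm_pick: str, pool: List[Evidence]) -> Tuple[Optional[str], List[str]]:
    """Accept the LLM's pick if it passes every check; otherwise fall back and say why."""
    by_name = {e.name: e for e in pool}
    pick = by_name.get(llm_pick)
    problems = violations(pick) if pick else ["no evidence record for candidate"]
    if not problems:
        return llm_pick, []
    valid = [e for e in pool if not violations(e)]
    fallback = max(valid, key=score).name if valid else None
    return fallback, problems

pool = [  # placeholder candidates, not real drugs
    Evidence("candidate-x", 0.30, 3, 0.10, True),
    Evidence("candidate-y", 0.45, 2, 0.05, False),
    Evidence("candidate-z", 0.25, 4, 0.02, True),
]
print(review("candidate-y", pool))
# ('candidate-x', ['not approved', 'no phase 3+ evidence'])
```

Returning the list of violations alongside the recommendation gives the final response something concrete to cite when it explains why the LLM's first suggestion was overridden.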

The LLM crafts a clear, professional response in natural language, citing its sources and reasoning steps.

LLM used for final output formatting.
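For this final step, the LLM would normally write the prose, but the structure around it can be enforced in code. The hypothetical `format_answer` helper below renders numbered reasoning steps and a source list, and rejects any step that cites a source number that does not exist, which is one simple guard against invented citations.

```python
import re
from typing import List

def format_answer(recommendation: str, reasoning: List[str], sources: List[str]) -> str:
    """Render the final answer with numbered reasoning steps and a numbered source list."""
    for step in reasoning:
        for ref in re.findall(r"\[(\d+)\]", step):
            if not 1 <= int(ref) <= len(sources):
                raise ValueError(f"step cites missing source [{ref}]: {step}")
    lines = [f"Recommendation: {recommendation}", "", "Reasoning:"]
    lines += [f"{i}. {step}" for i, step in enumerate(reasoning, start=1)]
    lines += ["", "Sources:"]
    lines += [f"[{i}] {src}" for i, src in enumerate(sources, start=1)]
    return "\n".join(lines)

answer = format_answer(
    "candidate-x",
    ["Approved per the structured data source [1]",
     "Phase 3 evidence with acceptable side effects [2]"],
    ["Placeholder FDA record", "Placeholder trial report"],
)
print(answer)
```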

Why This Hybrid Setup Works