AI – Demystified and Delivered

AI is revolutionizing the world – we're revolutionizing AI

AI is reshaping every industry, and now we’re redefining its power. Our mission is to democratize AI, including generative AI, agentic AI, and reasoning models, delivering maximum profitability for customers while minimizing environmental and societal impact.

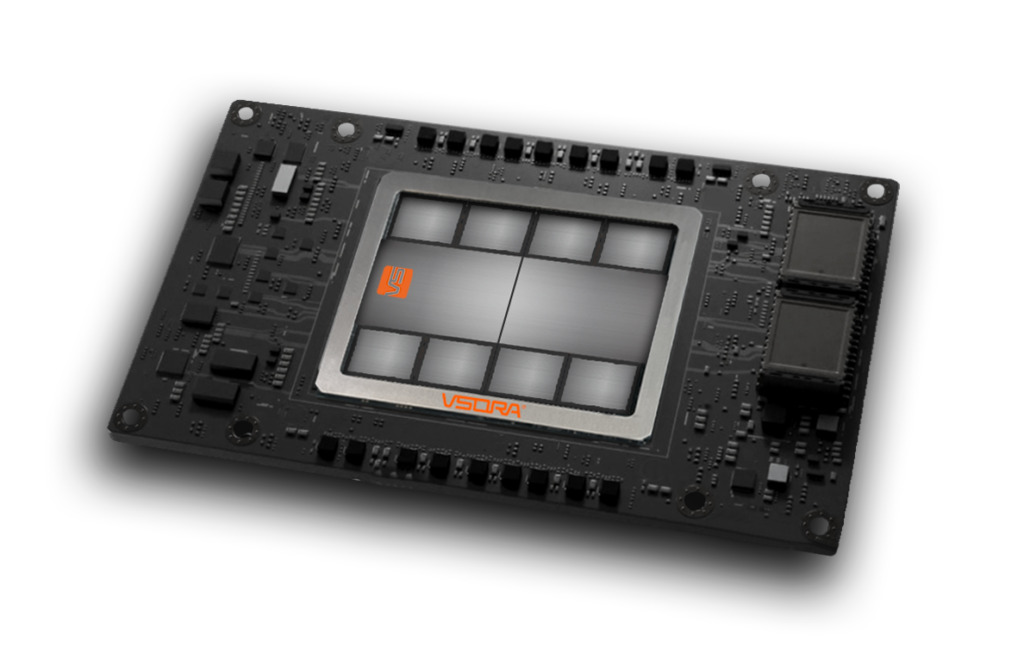

Unlike conventional accelerators built for training, VSORA’s Jotunn8 is engineered exclusively for inference. By optimizing for latency-sensitive workloads, it achieves lightning-fast response times, dramatically higher throughput, and a significantly lower cost-per-query.

Discover how Jotunn8 makes AI more accessible, efficient, and sustainable than ever.

We are VSORA

VSORA is a French fabless semiconductor company delivering ultra-high-performance AI inference solutions for both data centers and edge deployments. Our proprietary architecture achieves exceptional implementation efficiency, ultra-low latency, and minimal power draw—dramatically cutting inference costs across any workload.

Fully programmable and agnostic to both algorithms and host processors, our chips serve as versatile companion platforms. A rich instruction set lets them seamlessly handle pure AI, pure DSP, or any hybrid of the two, all without burdening developers with extra complexity.

To streamline development and shorten time-to-market, VSORA embraces industry standards: our toolchain is built on LLVM and supports common frameworks like ONNX and PyTorch, minimizing integration effort and customer cost.

A European AI Inference Chip Provider

Join VSORA!

Drive the Future of Generative AI

Our rapid growth is powered by a talented, multicultural team united by pride in our work and a commitment to helping everyone shine. We’ve traded rigid hierarchies for a relaxed, friendly atmosphere where dedication, ownership, collaboration, agility, and engagement guide everything we do. For us, work isn’t a checklist; it’s a shared mission. You’re empowered to shape a leading company and share in its success.