SUPERCHIP

SUPERCHIP (Scalable Unified Processor Enhancing Revolutionary Computing) is our answer to the surging demand for real-time supercomputing across edge AI, autonomous driving, generative AI, and decentralized AIoT applications. Funded under the HORIZON-EIC-2023-ACCELERATOR-01 call, this project tackles the exponential growth of sensor data and AI workloads that today’s semiconductor providers struggle to serve.

The Problem

Modern automation—especially Level 4/5 autonomous driving—relies on ingesting vast streams of sensor inputs and running complex algorithms to maintain a dynamic, 360° perception model in under 20 ms. Yet no commercially available solution can efficiently combine AI inference and digital signal processing (DSP) at that speed and scale. Current multi-chip assemblies introduce high latency, wasted silicon utilization (often under 20 percent), and excessive power draw, undermining both performance and sustainability. To achieve true autonomy and maximum safety, the industry must deliver enormous compute throughput, ultra-low latency, minimal energy consumption, seamless AI-DSP integration, deterministic execution, in-field programmability, and cost-effectiveness in a single platform.

Our Solution

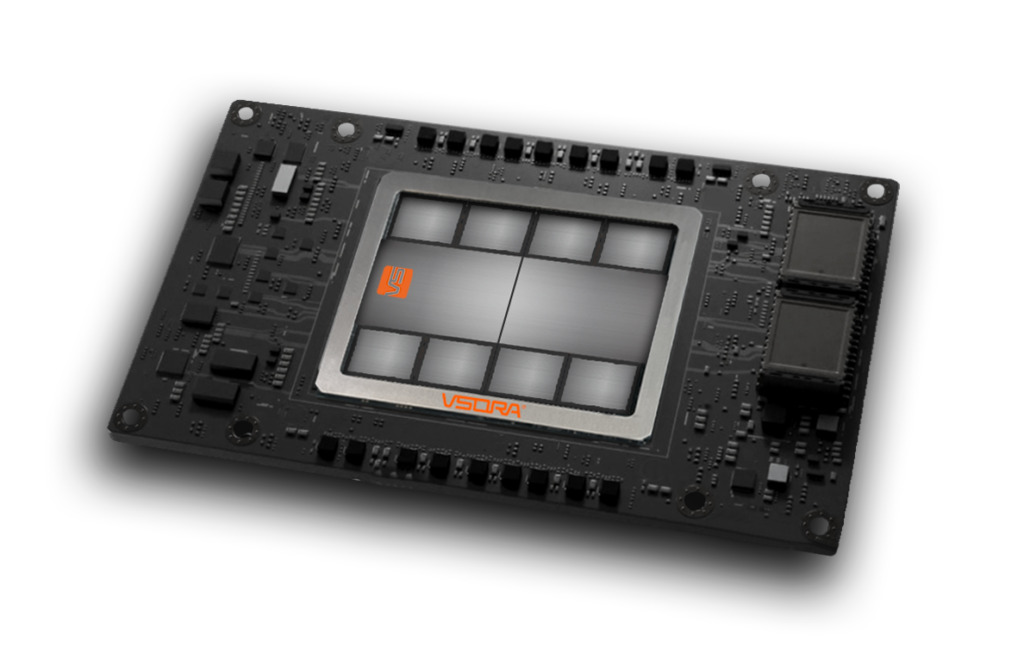

Our companion chip, TYR, revolutionizes this space by adding “extra brains” to conventional processors. The TYR family (TYR1, TYR2, TYR4) plugs into a central compute unit and operates semi-autonomously, asynchronously processing data alongside the main CPU. Each chip delivers between 400 and 1,600 TFlops of raw performance with 70–80 percent implementation efficiency, all with very low power consumption and with a silicon footprint small enough to keep costs competitive. Fully programmable in high-level languages and agnostic to host algorithms, Tyr transforms how AI and DSP tasks coexist—unlocking real-time, context-aware processing that makes Level 4/5 autonomy, green transitions, and next-generation AI applications commercially viable. SUPERCHIP is our answer to the surging demand for real-time supercomputing across edge AI, autonomous driving, generative AI, and decentralized AIoT applications. Funded under the HORIZON-EIC-2023-ACCELERATOR-01 call, this project tackles the exponential growth of sensor data and AI workloads that today’s semiconductor providers struggle to serve.

Co-funded by the European Union. Views and opinions expressed are however those of the author(s) only and do not necessarily reflect those of the European Union. Neither the European Union nor the granting authority can be held responsible for them.