Unlocking the Power of Edge AI

Where efficiency meets innovation

Bringing Intelligence Closer to the Action with Edge AI

Real-time decision-making is increasingly critical, and that’s where Edge AI delivers. Unlike traditional cloud-based AI, Edge AI processes data where it’s generated (on devices, machines, and sensors), enabling instant insights, lower latency, stronger privacy, and reduced bandwidth costs. From autonomous vehicles to smart factories, Edge AI helps industries operate faster, smarter, and more securely. Tyr is built to unlock the full potential of Edge AI, delivering data-center-class performance in a compact, ultra-efficient form factor that brings advanced AI capabilities to the very edge of your operations.

What Sets Edge AI Apart?

Local Processing

Real-Time Intelligence

Enhanced Security & Compliance

Lower Latency & Bandwidth Usage

Built for the Edge

Cost-Effective & Sustainable

Data-Center-Class AI at the Edge

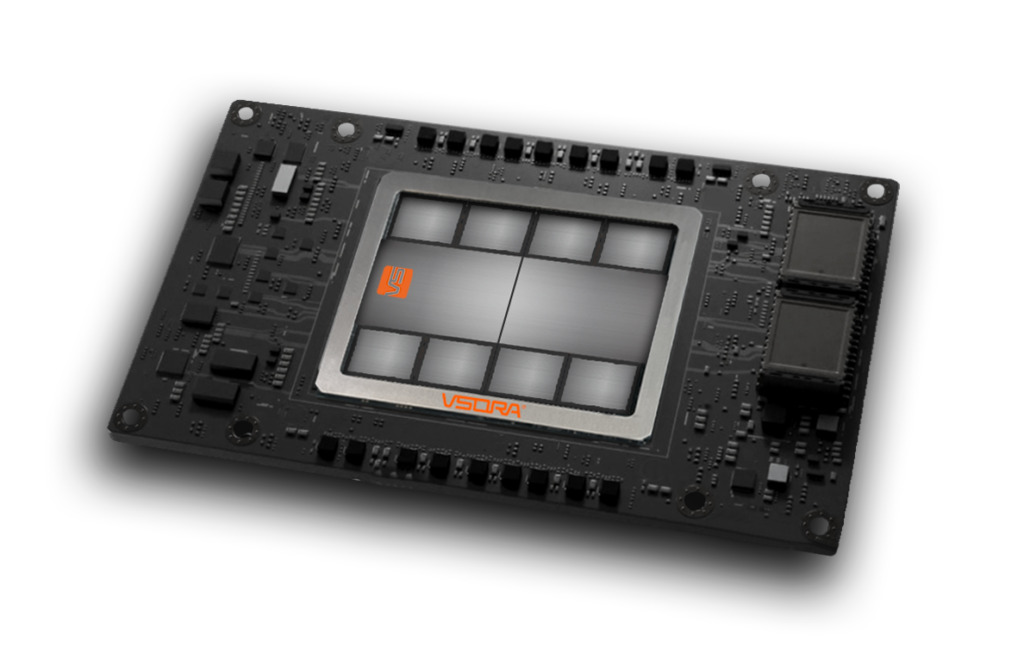

Our Tyr family of processors delivers up to 1,600 TFLOPS of Edge AI performance, approaching data-center levels of compute at dramatically lower energy consumption. This makes edge computing viable in areas that once seemed out of reach.

Experience AI at the edge—faster, safer, and smarter.

Real-World Use Cases:

Edge AI in Action

Edge AI is reshaping industries by enabling fast, secure, and intelligent decision-making—right where it’s needed most. Here’s how it’s making an impact:

Autonomous Vehicles

Edge AI processes real-time sensor data to build an immediate understanding of the vehicle’s surroundings. That understanding drives critical decisions and actions within milliseconds, which is essential for safe navigation. With data cycles as short as ~30 ms, vehicles need ultra-low latency and high compute performance on board, which is exactly what Edge AI provides. Generative AI is also beginning to play a role in smarter navigation and prediction systems.
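To make the ~30 ms figure concrete, the short Python sketch below converts a data-cycle length into cycles per second and into the distance a vehicle covers during one cycle. The 100 km/h speed is an assumed example rather than a figure from this page, and the function names are illustrative only.

```python
# Back-of-the-envelope sketch: what a ~30 ms data cycle means for a moving vehicle.
CYCLE_MS = 30.0  # data-cycle length quoted in the text


def cycles_per_second(cycle_ms: float) -> float:
    """Number of complete sense-decide-act cycles that fit into one second."""
    return 1000.0 / cycle_ms


def metres_per_cycle(speed_kmh: float, cycle_ms: float) -> float:
    """Distance travelled during a single cycle at a constant speed."""
    speed_m_per_s = speed_kmh / 3.6  # convert km/h to m/s
    return speed_m_per_s * cycle_ms / 1000.0


if __name__ == "__main__":
    # 100 km/h is an assumed example speed, not a figure from the text.
    print(f"{cycles_per_second(CYCLE_MS):.1f} cycles per second")
    print(f"{metres_per_cycle(100.0, CYCLE_MS):.2f} m travelled per cycle at 100 km/h")
```

At 100 km/h, one 30 ms cycle corresponds to roughly 0.83 m of travel, so every cycle of extra delay means acting on a picture of the road that is almost a metre out of date.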

Robotics & Industrial Automation

Robots are increasingly autonomous, operating in dynamic environments where immediate reactions are crucial. Space robotics is a striking example: Mars rovers must operate without waiting for decisions from Earth, since signals take minutes to cross the distance. In factory settings, robots must react within milliseconds for tasks such as precision handling or quality control. Federated learning lets these robots adapt continually: each one updates its model based on local conditions and shares only model updates, so constant connectivity is not required.
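One widely used federated learning algorithm is federated averaging (FedAvg): each robot refines the shared model on its own data, and a coordinator averages the resulting weights, weighted by how many samples each robot used. The Python sketch below is a minimal, hypothetical illustration with a toy two-parameter linear model and made-up robot data. It is not Tyr's implementation, and all names (`local_update`, `federated_average`, `run_rounds`) are assumptions for illustration.

```python
from typing import List, Tuple

Weights = List[float]         # [intercept, slope] of a toy linear model
Sample = Tuple[float, float]  # (sensor reading x, target y)


def local_update(weights: Weights, data: List[Sample],
                 lr: float = 0.1, epochs: int = 5) -> Weights:
    """Client step: one robot refines the shared model on its own data
    with plain SGD. The raw samples never leave the robot."""
    w0, w1 = weights
    for _ in range(epochs):
        for x, y in data:
            err = (w0 + w1 * x) - y  # prediction error for one sample
            w0 -= lr * err           # gradient of 0.5 * err**2 w.r.t. w0
            w1 -= lr * err * x       # gradient of 0.5 * err**2 w.r.t. w1
    return [w0, w1]


def federated_average(updates: List[Tuple[Weights, int]]) -> Weights:
    """Server step of FedAvg: average client weights, weighted by sample count."""
    total = sum(n for _, n in updates)
    dim = len(updates[0][0])
    return [sum(w[i] * n for w, n in updates) / total for i in range(dim)]


def run_rounds(robots: List[List[Sample]], rounds: int = 20) -> Weights:
    """Repeat local training plus averaging; only (weights, count) is shared."""
    global_w: Weights = [0.0, 0.0]
    for _ in range(rounds):
        updates = [(local_update(global_w, data), len(data)) for data in robots]
        global_w = federated_average(updates)
    return global_w


if __name__ == "__main__":
    # Hypothetical per-robot data; both sets lie on y = 2x + 1.
    robot_a = [(-1.0, -1.0), (0.0, 1.0), (1.0, 3.0)]
    robot_b = [(-0.5, 0.0), (0.5, 2.0), (1.0, 3.0)]
    w = run_rounds([robot_a, robot_b])
    print(f"intercept={w[0]:.3f}, slope={w[1]:.3f}")
```

Only weights and sample counts cross the network in this sketch; the raw (x, y) readings stay on each robot. Because both robots' points lie on y = 2x + 1, the averaged model should approach an intercept of 1 and a slope of 2.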

Edge IoT Gateways in Smart Manufacturing

A modern industrial plant with 2,000+ machines can generate over 2,200 TB of data per month. Transmitting all of that to the cloud? Impractical. Edge AI filters, compresses, and analyzes data locally, right at the machine, for faster insights and lower costs.
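A quick calculation shows why shipping everything to the cloud is impractical. The Python sketch below converts the quoted 2,200 TB per month into the sustained uplink rate it would require, assuming a 30-day month and decimal terabytes. The `reduction` parameter and the 99% value in the example are hypothetical, standing in for whatever share of the data local filtering would remove.

```python
# Sketch: sustained uplink bandwidth needed to ship plant data to the cloud.
SECONDS_PER_MONTH = 30 * 24 * 3600  # assumes a 30-day month
BYTES_PER_TB = 10**12               # assumes decimal terabytes


def sustained_gbps(tb_per_month: float, reduction: float = 0.0) -> float:
    """Average uplink rate in Gbit/s. `reduction` is a hypothetical fraction
    of data removed by local filtering/compression before upload."""
    bits = tb_per_month * BYTES_PER_TB * 8 * (1.0 - reduction)
    return bits / SECONDS_PER_MONTH / 1e9


if __name__ == "__main__":
    plant_tb, machines = 2_200, 2_000  # figures quoted in the text
    print(f"whole plant: {sustained_gbps(plant_tb):.2f} Gbit/s sustained")
    print(f"per machine: {sustained_gbps(plant_tb / machines) * 1000:.2f} Mbit/s sustained")
    # 0.99 is an illustrative reduction ratio, not a measured figure.
    print(f"after 99% local reduction: {sustained_gbps(plant_tb, reduction=0.99) * 1000:.1f} Mbit/s")
```

The plant-wide figure works out to roughly 6.8 Gbit/s of continuous upload (about 3.4 Mbit/s per machine), before allowing for traffic peaks or protocol overhead.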

Examples include:

- Preventive maintenance through machine log analysis

- Real-time quality inspection on high-speed lines

- On-premises video analysis, where sensitive footage stays local and only encrypted summaries leave the site
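As an illustration of the local log-analysis and filtering pattern in the list above, the Python sketch below scores each machine reading against a rolling window with a simple z-score test and returns only a compact summary plus the flagged readings, so the raw stream never has to leave the site. The window size, threshold, and the `summarize_and_flag` name are assumptions chosen for illustration; the rolling z-score is a deliberately simple stand-in for a trained model, and encrypting the summary before upload is out of scope here.

```python
from collections import deque
from statistics import mean, pstdev
from typing import Deque, Dict, Iterable, List


def summarize_and_flag(readings: Iterable[float], window: int = 20,
                       z_threshold: float = 3.0) -> Dict[str, object]:
    """Process readings locally; return only a summary plus flagged anomalies."""
    history: Deque[float] = deque(maxlen=window)  # rolling window of recent values
    anomalies: List[Dict[str, float]] = []
    count = 0
    total = 0.0
    for i, value in enumerate(readings):
        # Score a reading only once the window is full.
        if len(history) == window:
            mu = mean(history)
            sigma = pstdev(history)
            if sigma > 0 and abs(value - mu) / sigma > z_threshold:
                anomalies.append({"index": i, "value": value})
        history.append(value)
        count += 1
        total += value
    return {
        "count": count,
        "mean": total / count if count else 0.0,
        "anomalies": anomalies,
    }


if __name__ == "__main__":
    # Hypothetical vibration readings: a steady signal with one spike at index 40.
    normal = [10.0 if i % 2 == 0 else 10.2 for i in range(40)]
    readings = normal + [15.0] + normal[:20]
    print(summarize_and_flag(readings))
```

In a setup like this, only the returned dictionary (a count, a mean, and a handful of flagged readings) would be sent upstream instead of every raw value.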

Edge AI = Real-Time Intelligence + Lower Costs + Greater Security

From smart factories to autonomous systems, Edge AI is unlocking powerful capabilities that were once impossible due to latency, bandwidth, or privacy constraints.

Bring AI to the edge—and bring your systems to the next level.