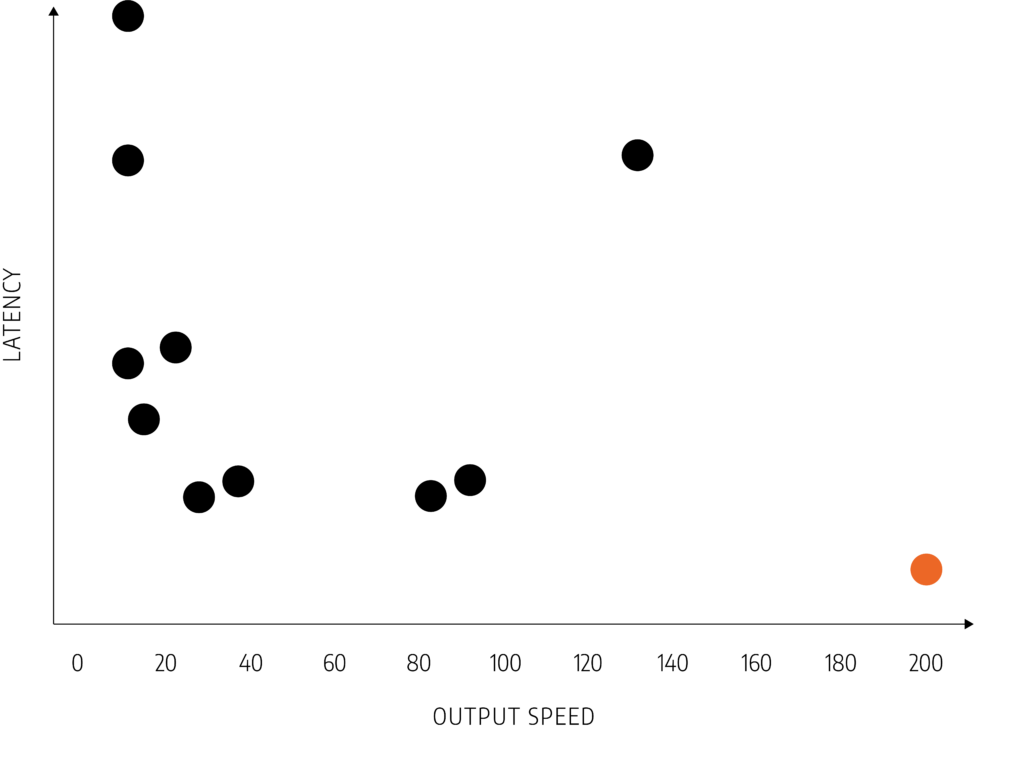

In modern data centers, success means deploying trained models with blistering speed, minimal cost, and effortless scalability. Designing and operating inference systems requires balancing key factors such as high throughput, low latency, optimized power consumption, and sustainable infrastructure. Achieving optimal performance while maintaining cost and energy efficiency is critical to meeting the growing demand for large-scale, real-time AI services across a variety of applications.

Unlock the full potential of your AI investments with our high-performance inference solutions. Engineered for speed, efficiency, and scalability, our platform ensures your AI models deliver maximum impact—at lower operational costs and with a commitment to sustainability. Whether you’re scaling up deployments or optimizing existing infrastructure, we provide the technology and expertise to help you stay competitive and drive business growth.

This is not just faster inference. It’s a new foundation for AI at scale.

In the world of AI data centers, speed, efficiency, and scale aren’t optional: they’re everything. Jotunn8, our ultra-high-performance inference chip, is built to deploy trained models with lightning-fast throughput, minimal cost, and maximum scalability. Designed around what matters most (performance, cost-efficiency, and sustainability), it delivers the power to run AI at scale, without compromise!

Why it matters: Low latency is critical for real-time applications like chatbots, fraud detection, and search.

Reasoning models, Generative AI, and Agentic AI are increasingly being combined to build more capable and reliable systems. Generative AI provides flexibility and language fluency. Reasoning models provide rigor and correctness. Agentic frameworks provide autonomy and decision-making. The VSORA architecture makes it easy to integrate these algorithms and delivers near-theoretical performance.

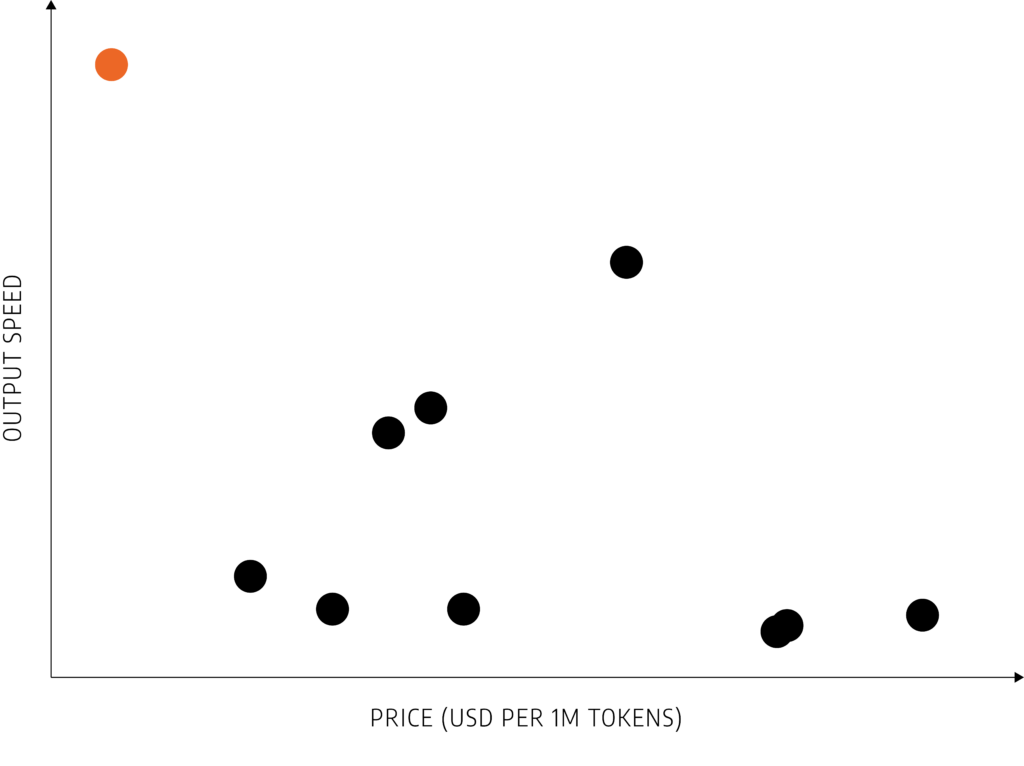

Why it matters: AI inference is often run at massive scale – reducing cost per inference is essential for business viability.

Unmatched Performance at the Edge with Edge AI.

- Fully programmable
- Algorithm agnostic
- Host processor agnostic
- RISC-V core to offload the host and run AI completely on-chip

Tyr 4

- fp8: 1600 Tflops
- fp16: 400 Tflops

Tyr 2

- fp8: 800 Tflops
- fp16: 200 Tflops

Tyr 4

- fp8/int8: 50 Tflops
- fp16/int16: 25 Tflops
- fp32/int32: 12 Tflops

Tyr 2

- fp8/int8: 25 Tflops
- fp16/int16: 12 Tflops
- fp32/int32: 6 Tflops

Close to theoretical efficiency

- Fully programmable
- Algorithm agnostic
- Host processor agnostic
- RISC-V cores to offload host & run AI completely on-chip

- fp8: 3200 Tflops
- fp16: 800 Tflops

- fp8/int8: 100 Tflops
- fp16/int16: 50 Tflops
- fp32/int32: 25 Tflops

Close to theoretical efficiency