The Ultimate AI Chip

Where efficiency meets innovation

Introducing the World’s Most Efficient AI Inference Chip

In modern data centers, success means deploying trained models with blistering speed, minimal cost, and effortless scalability. Designing and operating inference systems requires balancing key factors such as high throughput, low latency, optimized power consumption, and sustainable infrastructure. Achieving optimal performance while maintaining cost and energy efficiency is critical to meeting the growing demand for large-scale, real-time AI services across a variety of applications.

Unlock the full potential of your AI investments with our high-performance inference solutions. Engineered for speed, efficiency, and scalability, our platform ensures your AI models deliver maximum impact—at lower operational costs and with a commitment to sustainability. Whether you’re scaling up deployments or optimizing existing infrastructure, we provide the technology and expertise to help you stay competitive and drive business growth.

This is not just faster inference. It’s a new foundation for AI at scale.

Ultra-low Latency

Very High Throughput

Cost Efficient

Power Efficient

French Tech: Vsora designs chips for AI - 12/05

Khaled Maalej, co-founder and CEO of Vsora, was Laure Closier’s guest on French Tech, on Good Morning Business, this Monday, May 12. He discussed his company’s goal of producing artificial intelligence chips that offer unprecedented performance, consume less energy, and are more affordable than those of the world’s leading European companies.

AI – Demystified and Delivered

In the world of AI data centers, speed, efficiency, and scale aren’t optional; they’re everything. Jotunn8, our ultra-high-performance inference chip, is built to deploy trained models with lightning-fast throughput, minimal cost, and maximum scalability. Designed around what matters most (performance, cost-efficiency, and sustainability), it delivers the power to run AI at scale, without compromise!

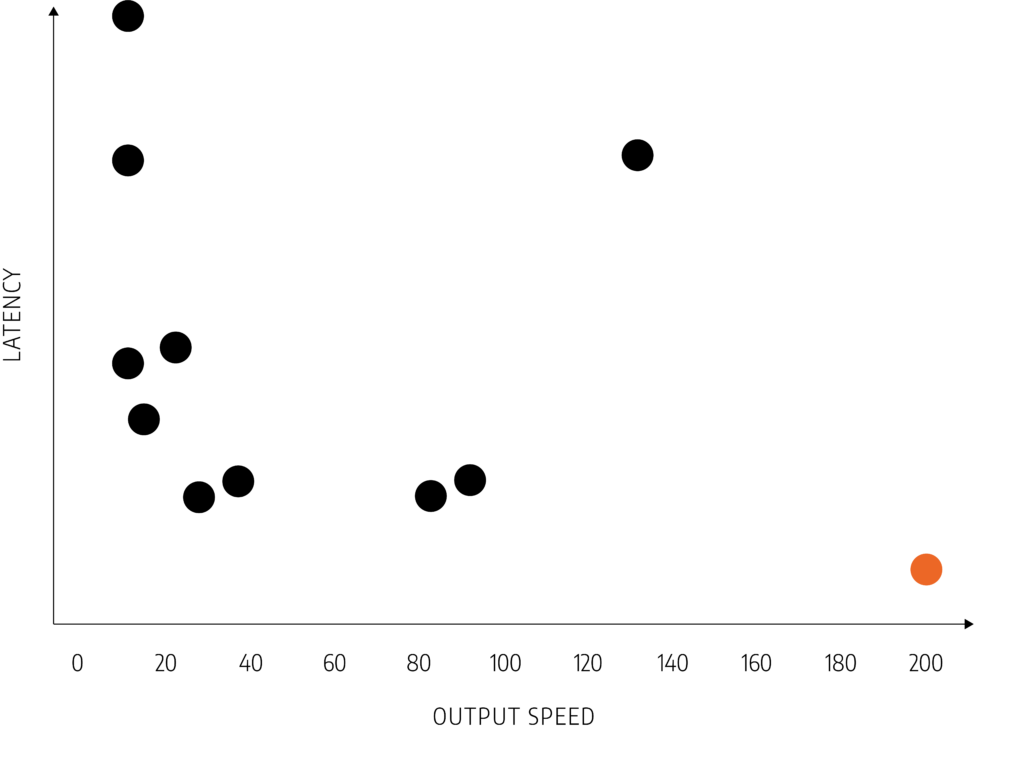

Jotunn8 Outperforms the Market

Why it matters: Critical for real-time applications like chatbots, fraud detection, and search.

Different Models, Different Purposes – Same Hardware

Reasoning models, generative AI, and agentic AI are increasingly being combined to build more capable and reliable systems. Generative AI provides flexibility and language fluency. Reasoning models provide rigor and correctness. Agentic frameworks provide autonomy and decision-making. The VSORA architecture enables smooth and easy integration of these algorithms, providing near-theoretical performance.

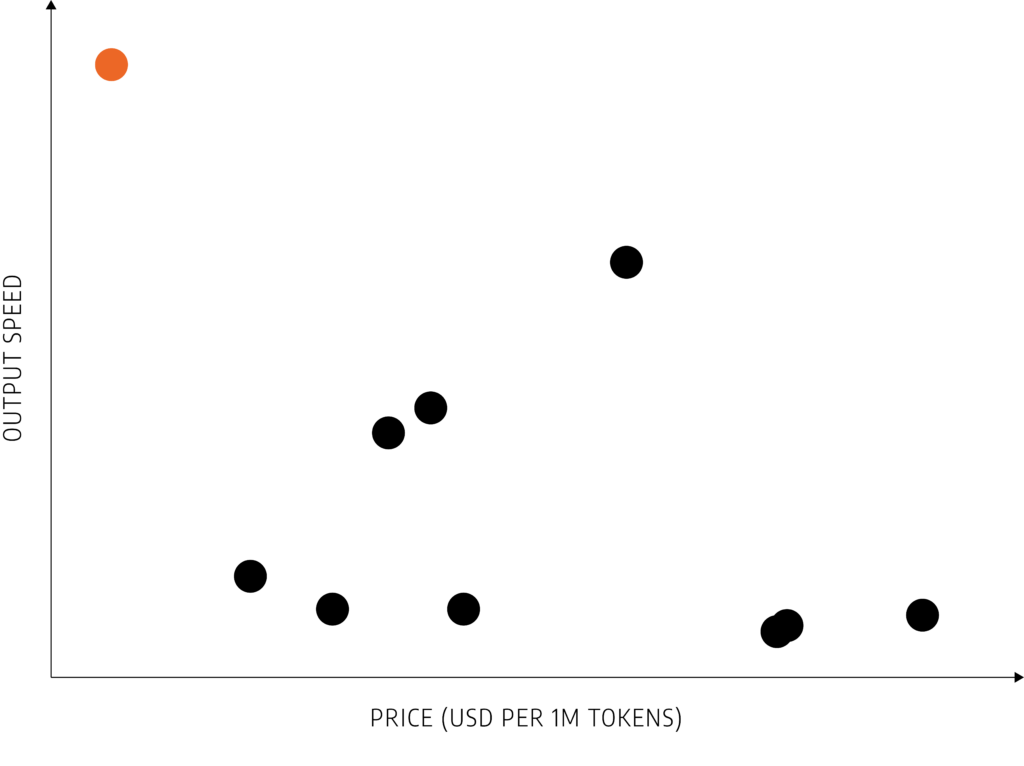

More Speed for the Buck

Why it matters: AI inference is often run at massive scale – reducing cost per inference is essential for business viability.

Unlocking the Power of Edge AI

In today’s world, real-time decision-making is critical — and that’s where Edge AI delivers. Unlike traditional cloud-based AI, Edge AI processes data directly where it’s generated — on devices, machines, and sensors — enabling instant insights, lower latency, enhanced privacy, and reduced bandwidth costs. From autonomous vehicles to smart factories, Edge AI empowers industries to operate faster, smarter, and more securely. Tyr is built to unlock the full potential of Edge AI, delivering data-center-class performance in an ultra-efficient, compact form — bringing advanced AI capabilities to the very edge of your operations.